What do we do when AI puts us in competition with ourselves — our own knowledge, our own ideas, our own speed?

What is Innovation? Innovation is defined as “the action or process of innovating.” No help there.

“Innovating” is defined as “make changes in something established, especially by introducing new methods, ideas, or products.” That helps, but just a little.

Because if true, the American Revolution was “innovative.” And so was your decision to have oatmeal instead of the usual eggs this morning.

Maybe the real question is: does any change to anything established qualify as innovation? If so, the term becomes meaningless.

Dictionary definitions fail because they treat innovation as a mechanical action. But innovation isn’t mechanical; it’s intentional. To communicate, we all have to agree on the meaning of a word, and that is difficult with one as intangible as innovation.

Two articles in a recent issue of Quirk’s Marketing Research Review make heroic attempts to define innovation.

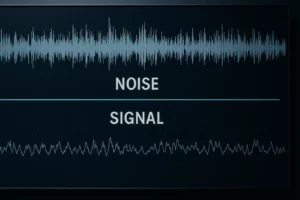

In “Turning generic GPTs into game-changing innovation,” Eric Tayce uses 1,540 words to make his points about innovation, using the word itself 23 times. Among other things, he tells us that “AI models trained on publicly available data can only recombine what already exists. When inputs aren’t grounded in something unique, you don’t get innovation, you get noise.” In his piece you learn that there are “innovation teams” and “innovation platforms,” all resting on his single premise: you can’t get innovation without supercharging “AI with what only you know.”

In “Equipping you and your organization for what’s next,” Niels Neudecker uses the word “innovation” only twice but spends 1,574 words explaining how to get it. Among other things, he tells us: “Amid the race for more data, analytics and automation, insights organizations risk losing the human element of insight, grounded in context, meaning and institutional memory. When this happens, decision-making becomes reactive rather than strategic, missing critical opportunities for innovation.” His “innovation” is the concept of “thick data”[1], which, when layered with contextual market trends and cultural intelligence, “is the foundation of strategic insight, the kind that drives innovation and informs long-term planning.”

But if innovation means changing something established with something new, nothing these two authors said addresses the implied problem: change for change’s sake isn’t always good, is it?

The word “innovation” itself is tossed around like a rubber ball these days (everywhere, not only in their pieces), and as a consequence it is losing its true meaning.

We Are All Innovators

If we accept that replacing something established with something new or different is innovation, then every human being is an innovator. Unless you repeat your life on a loop, you innovate every day. Change is the only constant, which makes innovation a universal human act, not a corporate department.

Combine innovation with AI, however, and suddenly the point becomes obvious: AI can innovate with ideas faster than any human being can. That combination is the basis of both pieces and a growing concern across all disciplines. (The Wall Street Journal’s piece “Tens of Thousands of White-Collar Jobs Are Disappearing as AI Starts to Bite,” for example, documents how a “leaner new normal for employment in the U.S. is emerging.”)

And that’s really what these authors and others are struggling with: how do you stay innovative when this tool we call AI does it faster?

How do you add “insight value” if everyone has access to AI?

Bluntly, you bend AI to your will; you don’t bend to its will.

Bending AI’s Will

Tayce’s thesis is at the heart of bending AI to your will: add what you know to AI. He argues, correctly, that AI knows only what all of us can know, namely publicly available data. Therefore, AI can only recombine what already exists.

Tayce probably read The Signal and the Noise, Nate Silver’s terrific 2012 book, and he uses “noise” as the foil to innovation: the static that interrupts it. Silver himself wrote, “The problem begins when there are inaccuracies in our data.”

So while Tayce’s piece is excellent at helping us understand the power and danger of AI and innovation, it never really defines what innovation is.

The evidence for this observation is in his opening statement, where most articles show their hand. He states, “The internet democratized information. And misinformation. Now AI is democratizing thinking.”

“Democratized” is the wrong word. To democratize means to introduce a democratic system, a form of government in which the people hold power. The word Tayce should have used is “exposed.”

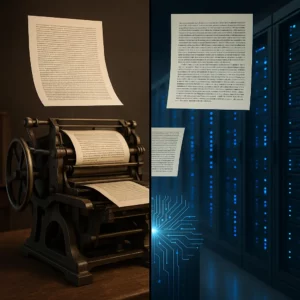

Think about what the printing press did for information, which is the same thing the internet did. People still had to consume the information; the printing press simply made it more accessible by exposing the ideas. People had to know how to read, just as you need a computer to use AI. And that exposure includes misinformation (whatever that is).

Every human does what AI does: gather information, combine it with other information, and then act (or not) on the results. Tayce says, “when inputs aren’t grounded in something unique, you don’t get innovation, you get noise.”

Nate Silver noted: “There isn’t any more truth in the world than there was before the Internet or the printing press. Most data is just noise, as most of the universe is filled with empty space.”

Which raises the real question: what is truly unique? How much of anyone’s thinking can be?

“There’s nothing new under the sun” is a common saying that happens to be true, and Tayce’s piece is about how much “creative freedom to give AI” to get something new, something unique, something innovative.

The problem is, AI isn’t creative. AI doesn’t originate intention, judgment, value, or stakes; it recombines patterns. Innovation requires intention.

AI itself will tell you it isn’t. And while Tayce’s article offers a sound basis for approaching AI (as assistant, collaborator, co-creator), it’s really just about using AI in your very own strategic planning session (remember those, where a bunch of executives got together to chart a course of action based on competitive analysis, positioning, etc.?).

AI accelerates thinking, but it does not originate it.

Is Insight Innovation?

Neudecker’s article takes a slightly different turn, but it is nevertheless about AI and innovation. Because of the “seismic shifts” in the insights world, “Insights teams must now show up as operational and strategic.” “Insights teams” is just another way of saying “innovation teams.” In other words, be (as Tyne said) “proactive drivers of change.”

The piece is peppered with Neudecker’s own examples of how to do this, including moving away from “siloed, do-it-yourself research models toward more collaborative, integrated approaches.” The problem is that while collaboration is a good thing, you don’t make money or gain insights from what everyone knows; you do it with what no one else knows. Collaboration, or “creativity by committee,” often leads to what ad people call “a dog’s breakfast.”

Indeed, Neudecker points out, “As organizations grow more data-rich they risk becoming insight-poor.” How can that be? Nate Silver offers this answer: “We share so much information that our independence is reduced.” Collaboration makes it easy to stop thinking for yourself, bucking the system, and looking where others might not be looking. Sometimes it’s just nice to be in a room by yourself and, as Dostoevsky said, “break things.”

Speed Is King

AI is a speed demon, and that’s why Neudecker says “the real competitive advantage lies in accelerating the speed to action.” It’s one thing to think fast; it’s another to act on what you thought about. That delay has to be overcome, and the marching orders have to change from “ready, aim, fire” to “ready, fire, aim, and fire again.”

Marcus Luttrell wrote in Lone Survivor: “The SEALs place a premium on brute strength, but there’s an even bigger premium on speed.” That’s true in war and in business, and in AI it’s a strategic imperative.

The problem is organizational mud: companies get stuck in the mud of process. Process was a big thing in the early 2000s, and its study became so intense that people studied the process instead of doing things; we’re still paying the price for that detour in strategic thinking.

Neudecker says we have to reimagine “the role of the insights professional.” He notes that these professionals “will be valued not just for their technical expertise but for their ability to connect the dots across complex data ecosystems to eventually influence and inspire bold, confident actions.”

All well and good, but what if you can’t see the dots?

A while back, my blog post “The Problem with Dots” pointed out exactly that problem (because I am red-green color blind, I didn’t see those colors… imagine that for a guy in advertising). So when you start connecting dots, you have to be sure you see all of them, or you get a really wrong picture.

That’s why it makes more sense to learn to look for patterns, not just dots. People see what they are used to seeing, and because they get used to it, they see only the dots. But the world isn’t made of dots alone. There are dashes too. Patterns require perspective; AI has computation, not perspective.

The bottom line? It’s not AI that is the innovator: it’s you. It’s not data that are the innovation: it’s the person seeing the data. Which brings us to the uncomfortable truth behind all of this: innovation has always been a human responsibility — not a machine’s.

The great science fiction movie Soylent Green ended with Charlton Heston screaming, “Soylent Green is people … it’s people!!!” The earth had become so overcrowded that the popular food ration was secretly made from people, and Heston’s character discovered the truth. In itself, that was an innovative way of feeding the population. Perhaps that’s what AI is going to do, too. Maybe we should call AI information Soylent Data! I think I’ll trademark those words! Soylent Data™!

Let me hear from you!

____________________________________________

[1] The term “thick data” was popularized by technology ethnographer Tricia Wang, who used it in a 2013 article and a subsequent 2016 TED Talk to advocate for qualitative, ethnographic approaches in the “Era of Big Data.”